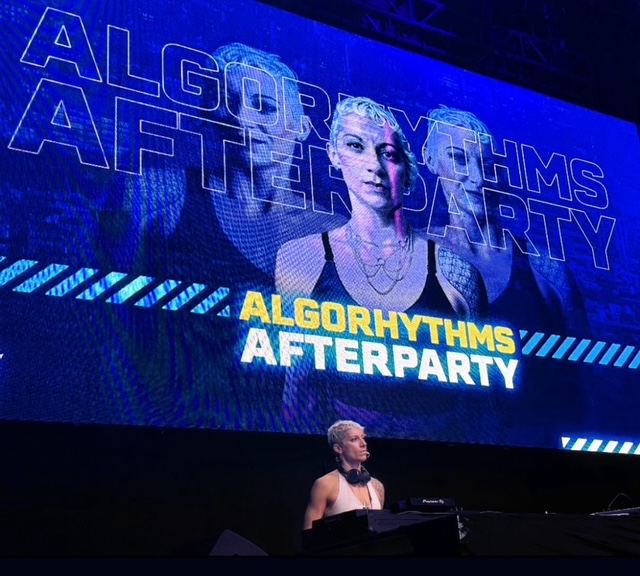

I had the privilege of attending, speaking, and DJing at the first-ever SEO Week conference, held by iPullRank in New York City. SEO Week was the longest and most comprehensive SEO event I’ve ever attended (and I’ve been to many) – there were 4 full days of cutting-edge talks from 40 incredible speakers. While I wasn’t able to attend all 40 talks, I did my best to summarize some of the key points from the talks I was able to attend:

1. The Rise of the Agentic Internet

James Cadwallader (CEO, Profound) introduced the concept of the “Agentic Internet,” a world where humans no longer interact directly with websites but instead send agents on their behalf. These agents, powered by LLMs, retrieve and synthesize information from the web, often without the user ever needing to click on the source. This theory leads to a “zero-click future” and fundamentally shifts SEO’s focus from user engagement to agent engagement.

James emphasized that SEO professionals must shift toward optimizing the Agent Experience (AX). He predicts that AX will become the new UX. Agents have different priorities than humans; they prefer structured data, semantic URLs, metadata, and comparative listicles. Many don’t currently render JavaScript, prioritize backlinks, or reward flashy web design. As agents become the first-class citizens of the internet, James predicted that AX will become the most critical function in marketing.

He also discussed Shopify’s brand new integration with ChatGPT, the growing dominance of answer engines, and the risk that brands may lose direct user relationships as agents “steal” the end user.

2. AI, Vector Embeddings, and Semantic Search

Mike King, my good friend and the CEO and founder of iPullRank (as well as the visionary behind the SEO Week conference), provided a deep dive into the mechanics of modern AI-driven search. He explained how vector embeddings represent words as coordinates in a high-dimensional vector space. Concepts that are semantically related sit close together in this space, and cosine similarity is used to measure their relatedness (with scores close to 1 indicating a close match). Mike explained that many SEO tools are stuck using the “lexical model,” which is an outdated way of approaching SEO, given that search algorithms have largely shifted toward using a semantic model.
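To make the cosine similarity idea concrete, here is a minimal sketch in plain Python. The three-dimensional vectors are hand-picked, hypothetical values chosen only for illustration; real embeddings come from a model and have hundreds or thousands of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an all-zero vector as unrelated to everything
    return dot / (norm_a * norm_b)

# Hypothetical 3-dimensional embeddings, hand-picked for illustration
embeddings = {
    "nyc": [0.9, 0.1, 0.0],
    "new york city": [0.85, 0.15, 0.05],
    "banana bread": [0.0, 0.2, 0.95],
}

query = embeddings["nyc"]
for phrase, vector in embeddings.items():
    print(f"{phrase}: {cosine_similarity(query, vector):.3f}")
```

With these toy numbers, “nyc” and “new york city” score close to 1, while “banana bread” scores close to 0.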

Mike explained that algorithms use embeddings to cluster content, enhance internal linking, and calculate topical relevance. This allows for a more nuanced understanding of user queries – for example, associating “NYC” and “New York City” or distinguishing between “Apple” the fruit and “Apple” the brand.

Mike introduced the concept of relevance engineering, a fusion of information retrieval, UX, and digital PR. (I noticed that he also included “relevance engineering” on the billboard he put up for the event in Times Square.) He criticized current SEO tools for not embracing these scientific methodologies and emphasized the need for reproducibility in SEO experimentation. For example, he noted that many SEO best practices recommend using short title tags, but highlighted that his agency’s tests revealed that clicks increase when GSC keywords are appended to titles.

He described the shift from traditional inverted indexes to hybrid retrieval systems that combine lexical search with vector-based approaches, noting that query expansion and fanout allow engines to anticipate user intent beyond the typed query.
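A hybrid retrieval system of this kind can be sketched as a weighted blend of a lexical score and a vector score. This is only an illustration under stated assumptions: the term-overlap scoring, the `alpha` weight, the function names, and the two-dimensional document vectors are all hypothetical, not a description of how any search engine actually combines the two signals.

```python
import math

def lexical_score(query, text):
    """Fraction of query terms that appear verbatim in the text."""
    query_terms = set(query.lower().split())
    text_terms = set(text.lower().split())
    if not query_terms:
        return 0.0
    return len(query_terms & text_terms) / len(query_terms)

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0

def hybrid_rank(query, query_vec, docs, alpha=0.5):
    """Rank docs by alpha * lexical score + (1 - alpha) * semantic score."""
    scored = []
    for doc in docs:
        score = (alpha * lexical_score(query, doc["text"])
                 + (1 - alpha) * cosine(query_vec, doc["vec"]))
        scored.append((round(score, 3), doc["id"]))
    return sorted(scored, reverse=True)

# Made-up documents with hypothetical 2-D embeddings
docs = [
    {"id": "a", "text": "best pizza in new york city", "vec": [0.9, 0.1]},
    {"id": "b", "text": "nyc pizza guide", "vec": [0.88, 0.12]},
    {"id": "c", "text": "sourdough starter tips", "vec": [0.1, 0.9]},
]
ranking = hybrid_rank("nyc pizza", [0.9, 0.1], docs)
print(ranking)
```

Document “a” never uses the string “nyc,” so a purely lexical match only gives it partial credit; the vector score is what keeps it well ahead of the unrelated document “c.”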

3. AI Overviews (AIO) and Retrieval-Augmented Generation (RAG)

Mike King reported that approximately 20% of Google queries now include AI Overviews (AIOs) – although I have seen various studies indicating that the number is much higher now, and increasing all the time. AIOs are powered by Retrieval-Augmented Generation (RAG), where pages are chunked semantically and indexed so that LLMs can retrieve and synthesize passages in response to queries.

Mike detailed the steps in RAG: tokenizing content, indexing it, chunking based on logical boundaries, and feeding relevant passages into the generative model. He noted that AIO uses a different model than standard results and also pointed out that the product is quickly improving. He also indicated that citations in AIOs can be extracted and analyzed for cosine similarity.
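The retrieval half of those steps can be sketched in a few lines. This is a toy version under loud assumptions: chunks are split on blank lines as a stand-in for “logical boundaries,” simple term counts replace real embeddings, and the call to the generative model is left out entirely. The function names are made up for this example.

```python
import math
import re
from collections import Counter

def chunk(page_text):
    """Split a page on blank lines, a stand-in for 'logical boundaries'."""
    return [p.strip() for p in re.split(r"\n\s*\n", page_text) if p.strip()]

def tokenize(text):
    """Lowercase the text and keep alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def similarity(counts_a, counts_b):
    """Cosine similarity between two term-count vectors."""
    dot = sum(counts_a[t] * counts_b[t] for t in counts_a)
    norm = (math.sqrt(sum(v * v for v in counts_a.values()))
            * math.sqrt(sum(v * v for v in counts_b.values())))
    return dot / norm if norm else 0.0

def retrieve(query, index, k=1):
    """Return the k chunks most similar to the query."""
    query_counts = Counter(tokenize(query))
    ranked = sorted(index, key=lambda item: similarity(query_counts, item[1]),
                    reverse=True)
    return [text for text, _ in ranked[:k]]

page = """Cosine similarity measures the angle between two vectors.

Our office is open Monday through Friday.

Chunking splits a page into passages before indexing."""

# Index: each chunk stored alongside its term counts
index = [(c, Counter(tokenize(c))) for c in chunk(page)]
passages = retrieve("how does chunking split a page", index)

# The retrieved passages would be handed to a generative model (not shown)
prompt = "Answer using only these passages:\n" + "\n".join(passages)
print(prompt)
```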

Crystal Carter (Head of SEO, Wix) provided an extensive overview of the various large language models, with a focus on the groundbreaking Chinese LLM, DeepSeek. Crystal noted that structured data can influence what content is ingested and retrieved in DeepSeek. Similarly, Krishna Madhavan (Microsoft) reinforced that factual, clear writing – especially using semantic triples and unambiguous stats – boosts content’s chances of inclusion in large language models.

However, it was refreshing to hear an alternative perspective from renowned Chilean computer scientist, Ricardo Baeza-Yates. Ricardo raised ethical and technical concerns about RAG. He called it a “bad patch” for language model shortcomings, such as factual inaccuracies, and warned that it can amplify mediocre content due to its indiscriminate retrieval. He also flagged hallucination risks and the tendency of LLMs to reflect biases in training data.

Personally, I was thrilled to hear this perspective from Ricardo (who proceeded to spend the day with the SEO community, both at the conference and at the SEOFOMO meetup later in the day), who kept us in check and reminded us of the limitations of LLMs, as SEOs shared the many ways they are leveraging AI in their work.

4. Trust, Outcomes, and Topical Authority

Jeff Coyle (cofounder of MarketMuse) emphasized that AI evaluates success based on outcomes, not clicks. Algorithms prioritize truly helpful, original, and trustworthy content. Evaluation now happens at the page and site level, meaning every piece of content must earn its place. Citing sources, being authoritative, and offering statistics are factors that can help content appear in AI Overviews.

Manick Bhan (founder of SearchAtlas) supported this with findings that topical relevance, not domain power, correlates best with rankings. Manick introduced visualizations for topical vectors and emphasized reducing a site’s radius by pruning off-topic content. He also pointed out that LLMs are US-centric even in global analyses.
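One way to picture a site’s “radius” is as the average distance of its page embeddings from the site’s centroid. That definition is an assumption made for this sketch, not necessarily the one Manick uses, and the two-dimensional page vectors and URLs below are made up.

```python
import math

def centroid(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(component) / n for component in zip(*vectors)]

def distance(a, b):
    """Euclidean distance between two vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def site_radius(pages):
    """Mean distance of page vectors from the site centroid (assumed definition)."""
    center = centroid(list(pages.values()))
    return sum(distance(v, center) for v in pages.values()) / len(pages)

# Hypothetical 2-D page embeddings
pages = {
    "/seo/technical-audit": [0.9, 0.1],
    "/seo/internal-linking": [0.8, 0.2],
    "/seo/schema-markup": [0.85, 0.15],
    "/blog/office-party-photos": [0.1, 0.9],
}

# Find the page farthest from the centroid and see what pruning it does
center = centroid(list(pages.values()))
outlier = max(pages, key=lambda url: distance(pages[url], center))
pruned = {url: vec for url, vec in pages.items() if url != outlier}

print(outlier)
print(site_radius(pages) > site_radius(pruned))
```

In this toy data, removing the single off-topic page shrinks the radius sharply, which is the intuition behind pruning.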

Carrie Rose (founder & CEO, Rise at Seven) gave powerful case studies on brand salience and how to improve salience for specific categories. Red Bull failed to rank for “energy drinks for gamers” because its competitors were gaming websites, not beverage brands. Similarly, Ninja couldn’t rank for TikTok air fryer recipes despite strong brand presence. She advocated engineering category signals, especially by directing links to category pages, and following the ‘7/11/4 model’ – 7 hours, 11 interactions, 4 platforms – to build brand memory.

Carrie also contrasted homepage-heavy link profiles with those targeting commercial pages. She recommended building links directly to category URLs, using contextual mentions on media sites, and pitching original research to journalists with links back to targeted category pages.

5. Technical SEO and Data Collaboration

Elias Dabbas (Owner, The Media Supermarket) demonstrated how to use Advertools in Python to parse robots.txt, extract sitemaps, and convert data into usable frames. He explained core programming functions in Jupyter notebooks and stressed their role in scalable technical SEO.
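Advertools handles this with functions such as `robotstxt_to_df` and `sitemap_to_df`, which fetch the files over the network and return DataFrames. To show the underlying idea without a network call, here is a standard-library sketch that parses robots.txt text into rows; the `parse_robots` helper and the sample file are hypothetical and are not Elias’s code.

```python
def parse_robots(robots_text):
    """Turn robots.txt text into a list of {'directive', 'content'} rows."""
    rows = []
    for line in robots_text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and whitespace
        if ":" not in line:
            continue  # skip blank or malformed lines
        directive, content = line.split(":", 1)
        rows.append({"directive": directive.strip(), "content": content.strip()})
    return rows

# Made-up robots.txt content for illustration
sample = """\
User-agent: *
Disallow: /cart/
Allow: /blog/  # public posts

User-agent: GPTBot
Disallow: /

Sitemap: https://example.com/sitemap.xml
"""

rows = parse_robots(sample)
# Sitemap directives point to the XML files worth extracting next
sitemaps = [r["content"] for r in rows if r["directive"].lower() == "sitemap"]
print(len(rows), sitemaps)
```

A list of dictionaries like `rows` can be passed straight to a DataFrame constructor if you want the tabular view used in notebooks.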

Jori Ford (Chief Marketing & Product Officer at FoodBoss) examined bot behavior using Cloudflare logs, identifying 226 distinct bot crawlers. She recommended tagging meaningful bots, using Looker dashboards to visualize crawl overlaps, and creating “answer assets” for LLMs. Jori highlighted that high bot activity with low user clicks indicates a missed optimization opportunity.
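The “high bot activity, low user clicks” check can be sketched with a simple tally. Everything here is an assumption for illustration: the log records, the short list of bot tokens, the click counts, and the thresholds are invented, and real Cloudflare log exports contain many more fields.

```python
from collections import Counter, defaultdict

# Hypothetical substrings to look for in user-agent strings
BOT_TOKENS = ["GPTBot", "ClaudeBot", "PerplexityBot", "Googlebot", "bingbot"]

def identify_bot(user_agent):
    """Return the first known bot token found in the user agent, else None."""
    lowered = user_agent.lower()
    for token in BOT_TOKENS:
        if token.lower() in lowered:
            return token
    return None

# Made-up log records
records = [
    {"path": "/pricing", "user_agent": "Mozilla/5.0 (compatible; GPTBot/1.0)"},
    {"path": "/pricing", "user_agent": "Mozilla/5.0 (compatible; ClaudeBot/1.0)"},
    {"path": "/pricing", "user_agent": "Mozilla/5.0 (compatible; GPTBot/1.0)"},
    {"path": "/blog/faq", "user_agent": "Mozilla/5.0 (compatible; Googlebot/2.1)"},
    {"path": "/blog/faq", "user_agent": "Mozilla/5.0 (Windows NT 10.0) Chrome/124.0"},
]
clicks = {"/pricing": 1, "/blog/faq": 40}  # hypothetical click counts per path

hits_by_bot = Counter()
bot_hits_by_path = defaultdict(int)
for record in records:
    bot = identify_bot(record["user_agent"])
    if bot:
        hits_by_bot[bot] += 1
        bot_hits_by_path[record["path"]] += 1

# Flag paths that bots crawl often but users rarely click (thresholds are arbitrary)
opportunities = [path for path, hits in bot_hits_by_path.items()
                 if hits >= 3 and clicks.get(path, 0) < 5]
print(hits_by_bot.most_common(), opportunities)
```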

She also warned about model contamination, where models score unusually well after training on evaluation data. She advocated using leaderboard dashboards to test LLM outputs and being “obnoxiously helpful” with tooltips and inline explanations.

6. Getting Content into LLMs

Manick Bhan also suggested that in order to appear in LLM-generated answers, your content must be part of their training corpus. Common Crawl is the most accessible source. Manick recommended publishing guest posts on crawl-indexed domains, using platforms like WhitePress and SignalGenesys, or distributing content via PR Newswire.

Optimizing branded assets like ‘About’ pages and blogs, getting indexed in Bing results, and checking inclusion via Gemini were all recommended. Jori Ford encouraged SEOs to actively engage with LLMs about their own content, nudging it into relevance.

My own sidenote: this is becoming an increasingly common way to both influence and spam LLM outputs – by repeatedly ‘telling’ them the information you want to hear, and/or excessively using the feedback button to influence responses.

Dan Petrovic (Director of DEJAN Marketing) discussed how to set up tracking in LLMs and how LLMs perceive brands and entities. Dan covered bidirectional querying of LLMs to understand the relationship between brands and entities – seeing which brands are associated with specific entities, and which entities are associated with which brands. His talk named stochastic sampling, fine-tuning, model releases, and grounding as influences on outputs that matter when building an LLM tracker, and touched on tracking entities over time and identifying attention hotspots. These hotspots indicate how strongly the model understands the language surrounding brands and entities – basically, what the model thinks about your brand.
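Because sampling is stochastic, a tracker has to ask the same question many times and look at frequencies rather than a single answer. The sketch below shows that tallying step only. It is not Dan’s tooling: `track_mentions` is a hypothetical helper, the brand names are placeholders, and `fake_llm` is a stand-in that cycles through canned answers instead of calling a real model.

```python
from collections import Counter
from itertools import cycle

def track_mentions(ask, prompt, candidates, samples=10):
    """Ask the same prompt repeatedly; return the share of answers naming each candidate."""
    counts = Counter()
    for _ in range(samples):
        answer = ask(prompt).lower()
        for name in candidates:
            if name.lower() in answer:
                counts[name] += 1
    return {name: counts[name] / samples for name in candidates}

# Stand-in for a real model call: canned answers mimic sampling variation
_canned = cycle([
    "Popular options include Acme and Globex.",
    "Many people choose Acme.",
    "Acme, Initech and Globex are common picks.",
    "Globex is a frequent recommendation.",
])

def fake_llm(prompt):
    return next(_canned)

brands = ["Acme", "Globex", "Initech"]
# Direction 1: start from an entity and see which brands come back
brand_share = track_mentions(fake_llm, "Which brands make running shoes?",
                             brands, samples=4)
print(brand_share)
```

The reverse direction would reuse the same function with a brand in the prompt and a list of entities as the candidates; logging the shares by date gives the over-time view.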

7. Generative AI: Criticisms and Limitations

Ricardo Baeza-Yates flagged several issues with generative models: linguistic inequality, algorithmic bias, limited transparency, and lack of content diversity. Baeza-Yates cited an example where a chatbot falsely declared that he was dead (hey, something similar recently happened with my name, too!) and noted that LLMs rarely disclose errors.

Bianca Anderson (Manager of Organic Growth at hims and hers) cautioned that visibility doesn’t equal value and that AI Overviews may reduce traffic. She called for a “Copernican shift” in how success is measured in SEO, given that we can’t rely on traffic and rankings as the main KPIs in SEO anymore. Bianca supported this with a data-driven approach to content valuation: using a math equation to project conversion potential and using percentile tiers to evaluate the importance of content (rather than just search volume/traffic).
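Bianca’s exact equation isn’t reproduced here, so the sketch below uses an assumed one (projected conversions = sessions × conversion rate) purely to show how percentile tiers can rank content differently than traffic does. The pages, numbers, and tier cut-offs are all made up.

```python
def projected_conversions(sessions, conversion_rate):
    """Assumed formula: expected conversions = sessions x conversion rate."""
    return sessions * conversion_rate

def percentile_rank(value, all_values):
    """Percentage of values less than or equal to this one."""
    return 100 * sum(v <= value for v in all_values) / len(all_values)

def tier(percentile):
    """Map a percentile to a tier; the cut-offs are arbitrary illustrations."""
    if percentile >= 75:
        return "top"
    if percentile >= 50:
        return "middle"
    return "bottom"

# Hypothetical pages: (monthly sessions, conversion rate)
pages = {
    "/guide/overview": (10000, 0.001),
    "/product/compare": (1200, 0.05),
    "/blog/news": (3000, 0.002),
    "/product/pricing": (800, 0.04),
}

values = {url: projected_conversions(*stats) for url, stats in pages.items()}
tiers = {url: tier(percentile_rank(v, list(values.values())))
         for url, v in values.items()}

for url in pages:
    print(url, round(values[url], 1), tiers[url])
```

In this toy data the highest-traffic page lands in the middle tier, while two low-traffic product pages land in the top tier.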

8. Digital PR, Category Ownership, and Campaigns

Carrie Rose reiterated that digital PR is an amplifier – not a creator – of brand equity. As stated before, she encouraged linking PR campaigns to product/category pages rather than always just linking to homepages. She advocated for turning brands into “category leaders” by establishing them as experts in specific categories, and using influencer campaigns on TikTok and digital PR to drive links and visibility to category pages.

She gave examples like the company Dojo, where only 16% of links point to category pages, and advised brands to aim higher. Rose also recommended leveraging viral TikTok trends and user-generated content to attract ‘Top Stories’ citations – one of the most coveted SERP features for online publishers.

9. Negative SEO and Manipulation Tactics

Robert Hansen (R Snake), Managing Director at Grossman Ventures, gave a unique talk about how negative SEO does indeed exist, despite what Google has stated to the contrary. R Snake shared harmful tactics such as DMCA spam, registering RBL entries, and injecting negative brand terms into autocomplete suggestions. Basically, bombarding competing SEO teams and making their work impossible can be a devastating form of negative SEO.

Other negative SEO tactics included over-automation, physical attacks, client-side attacks, and the ease of manipulating LLM outputs through prompt bias or training contamination.

10. SEO Trends and AI Futures

Garrett Sussman (Director of Marketing at iPullRank) advised creating distinct pages for each intent type, noting that this improves rankings and allows for precision. He also encouraged brands to diversify their traffic mix by investing in direct and referral sources.

Garrett noted how Google CEO Sundar Pichai indicated that long-tail search volume is increasing, aligning with the trend toward conversational search. As users move from ‘keywordese’ to natural dialogue, LLMs are expected to become the dominant interface.

However, there were some concerns about the conversational nature of LLMs. For example, Garrett referenced recent comments from Sam Altman (OpenAI CEO) about reducing ChatGPT’s sycophantic tone – a concern about AI systems telling users only what they want to hear. As a result of this feedback, OpenAI recently rolled back a GPT-4o update in ChatGPT.

Speakers also discussed emerging SEO automation tools like OTTO and Windsurf, multimodal search projects like Project Astra, and advanced prompt engineering via modular microagents such as the Factuality Agent, Brand Voice Agent, and others.

11. The Vicious Cycles of SEO

After 39 incredible talks by mindblowing speakers, I gave the final talk at SEO Week and reminded the audience about the risks of taking SEO shortcuts – plus the potential for both search engines and LLMs to introduce anti-spam measures as spammers learn new tricks for manipulating LLM responses.

I traced a recurring cycle in the SEO world: new tactics emerge and are shared, competitors replicate them, and Google eventually responds with algorithm updates to neutralize spammy or manipulative practices. This cycle – seen over the years with keyword stuffing, link schemes, and now AI content – often leads to a degradation of user experience. Between 2022 and 2025, SEO saw a surge in low-effort strategies like parasite SEO, programmatic content, and large-scale creation of low-quality, AI-generated articles. Many of these tactics relied on exploiting loopholes in site authority or scale. In response, Google launched major updates including the 2023 Helpful Content Update and the 2024 Core & Spam Updates, both of which penalized content created primarily for search engines. Google’s new spam policy, Site Reputation Abuse, further cracked down on various types of third-party content spam, devastating many sites.

Looking ahead, I emphasized a shift away from chasing short-term hacks and toward building authentic, original content that’s useful to real users – and legible to language models. As Google rolls out AI Overviews and LLM-powered search, traditional metrics like rankings and CTRs are declining in importance. Instead, SEOs must now monitor their visibility within AI summaries, optimize for sentiment and accuracy in LLM responses, and ensure their brands are well represented across podcasts, social media, and highly cited content. I believe the future of SEO (and digital marketing) lies in personal branding, original research, multimedia content, and community trust – factors that LLMs increasingly rely on to determine authority and relevance.

SEO Week: A Whirlwind of SEO & AI Knowledge & Insights

NYC SEO Week showed that the future of SEO & AI belongs to those who understand both the structure of language and the logic of machines. From semantic clustering to bot behavior analytics, from ‘AX optimization’ to generative content curation, SEO is no longer about rankings and traffic alone. SEO is evolving quickly, and understanding and adopting these new tools is essential to staying ahead of the curve.